Unlocking the Power of AI

🚀 Unlocking the Power of AI with Dr. Bill and Dr. Sue Tripathi 🚀

In the latest episode of the Dr. Bill 360 Podcast, we dive deep into the world of Artificial Intelligence (AI) and its transformative impact on our personal, professional, and organizational lives. 🌍✨

🎙️ Episode Highlights:

- Understanding the AI Revolution: Dr. Sue Tripathi demystifies AI, explaining different types such as Machine Learning, Natural Language Processing, and Cognitive AI. She emphasizes how AI simulates human intelligence to process data and make decisions faster and more efficiently.

- AI in Everyday Life: Dr. Tripathi uses relatable examples to show how AI is already integrated into our daily lives. From retail decisions to delivery robots, AI is reshaping the way we interact with the world around us.

- Professional Impact: We explore how AI is revolutionizing industries like banking, healthcare, and manufacturing. Dr. Tripathi highlights the importance of data quality and the role of AI in enhancing efficiency and decision-making processes.

- Future of AI: Dr. Tripathi discusses the potential future developments in AI, including general AI and superintelligence. She addresses common fears and misconceptions, emphasizing the need for ethical practices and regulation.

- Personal Development: Practical advice on how individuals can stay relevant in an AI-driven world. Dr. Tripathi encourages continuous learning and upskilling to remain competitive in the evolving job market.

💡 Why You Should Listen:

- Gain a clear understanding of AI and its various applications.

- Learn how AI is transforming industries and creating new opportunities.

- Get insights into the ethical considerations and future developments in AI.

- Discover practical steps to enhance your skills and stay ahead in an AI-driven world.

Understanding Neural Networks 🤖

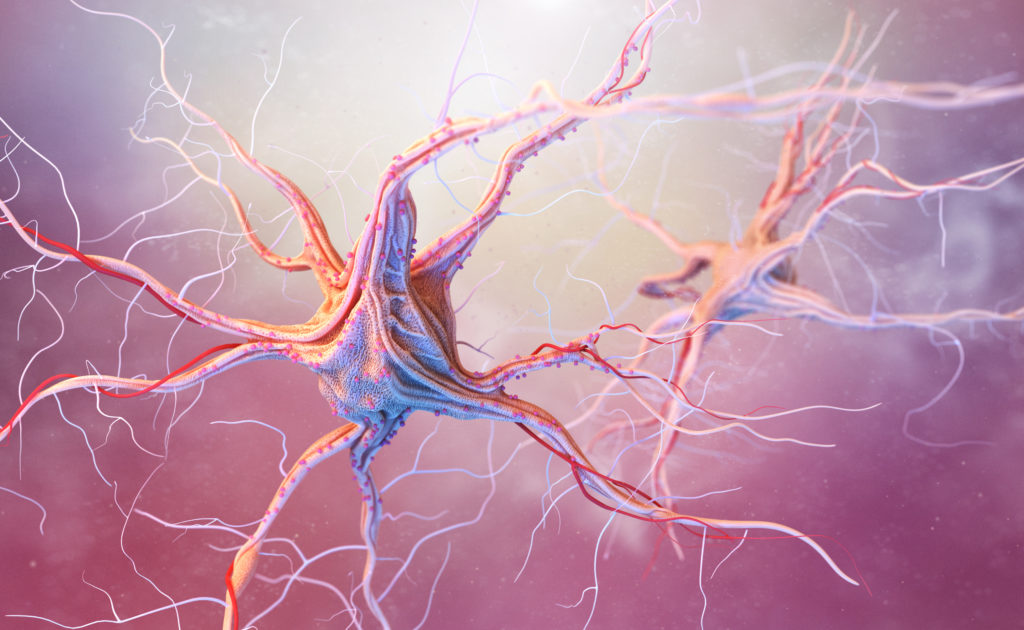

In both biology and artificial intelligence, neurons are the fundamental building blocks of complex systems. To understand how machine learning works, let’s explore the parallels between biological neurons and their artificial counterparts.

Biological Neurons

A biological neuron is a specialized cell in the nervous system that processes and transmits information. It has three main components:

- Dendrites: These are branch-like structures that receive signals from other neurons. They act as the input channels for the neuron.

- Nucleus (in the cell body, or soma): The cell body houses the nucleus and integrates the incoming signals. The nucleus itself maintains the cell rather than processing signals directly.

- Axon: A long, thread-like structure that transmits signals away from the neuron to other neurons or muscles. It acts as the output channel.

When the combined input arriving through its dendrites crosses a threshold, the neuron fires an electrical impulse (an action potential) that travels down the axon, transmitting the information to other neurons.

Artificial Neurons (Perceptrons)

In machine learning, an artificial neuron (the perceptron is its classic early form) mimics the function of a biological neuron. It is the basic unit of a neural network and has a similar structure:

- Inputs (analogous to Dendrites): These are the signals or data points fed into the perceptron. Each input has an associated weight that determines its importance.

- Processing Unit (analogous to Nucleus): This unit sums the weighted inputs plus a bias term and applies an activation function to determine the output. The activation function decides whether the perceptron should activate and send a signal.

- Output (analogous to Axon): The processed signal is then transmitted to other perceptrons in the network.

The perceptron adjusts its weights during training to minimize the error in its predictions, effectively “learning” from the data.
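To make the weighted-sum-and-update idea concrete, here is a minimal Python sketch of a single perceptron that uses a step activation and the classic perceptron learning rule. The AND-gate training data, the learning rate, the epoch count, and all function names are illustrative assumptions, not anything described in the episode.

```python
# Minimal perceptron sketch: weighted sum + bias, step activation,
# and the classic perceptron learning rule for updating the weights.

def step(z):
    """Step activation: fire (1) if the weighted sum is non-negative."""
    return 1 if z >= 0 else 0

def predict(weights, bias, inputs):
    # Weighted inputs ("dendrites") are summed with the bias, then activated.
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(z)

def train_perceptron(samples, labels, lr=1.0, epochs=20):
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            # Nudge each weight in proportion to its input and the error.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

# Toy data: the logical AND function.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 0, 0, 1]
w, b = train_perceptron(X, y)
print([predict(w, b, x) for x in X])  # → [0, 0, 0, 1]
```

Because AND is linearly separable, the perceptron rule is guaranteed to stop making mistakes after a finite number of updates. Once a full pass produces no errors, the weights stop changing.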

Neural Networks

A neural network is a collection of interconnected perceptrons arranged in layers:

- Input Layer: This layer receives the initial data, typically one node per input feature, and passes it on to the next layer.

- Hidden Layers: These layers perform complex transformations and feature extraction by processing the inputs through multiple interconnected perceptrons.

- Output Layer: This layer produces the final output, such as a classification or regression result.

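The network learns through backpropagation, which computes how much each weight contributed to the prediction error. It pairs this with gradient descent, which adjusts each weight in the direction that reduces that error. Repeating these steps over the training data minimizes the error in the network's predictions.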
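A single perceptron cannot learn XOR, because no straight line separates its classes. Hidden layers and backpropagation solve this. The NumPy sketch below trains a tiny 2-8-1 network on XOR with a forward pass, backpropagation of the cross-entropy gradient through both layers, and a plain gradient-descent update. The architecture (tanh hidden layer, sigmoid output), learning rate, epoch count, and names are assumptions chosen for illustration. Real projects would normally rely on a framework's automatic differentiation rather than hand-written gradients.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# XOR: not linearly separable, so it needs a hidden layer.
X_XOR = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
Y_XOR = np.array([[0], [1], [1], [0]], dtype=float)

def forward(params, X):
    W1, b1, W2, b2 = params
    h = np.tanh(X @ W1 + b1)   # hidden-layer activations
    p = sigmoid(h @ W2 + b2)   # output probability
    return h, p

def train_xor(hidden=8, lr=0.5, epochs=5000, seed=0):
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 1.0, (2, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 1.0, (hidden, 1))
    b2 = np.zeros(1)
    losses = []
    for _ in range(epochs):
        h, p = forward((W1, b1, W2, b2), X_XOR)
        p_safe = np.clip(p, 1e-12, 1 - 1e-12)  # avoid log(0)
        loss = -np.mean(Y_XOR * np.log(p_safe) + (1 - Y_XOR) * np.log(1 - p_safe))
        losses.append(float(loss))

        # Backpropagation: chain rule applied from the output back to the input.
        dz2 = (p - Y_XOR) / len(X_XOR)       # dLoss/d(output logits)
        dW2, db2 = h.T @ dz2, dz2.sum(axis=0)
        dz1 = (dz2 @ W2.T) * (1 - h ** 2)    # tanh'(z) = 1 - tanh(z)^2
        dW1, db1 = X_XOR.T @ dz1, dz1.sum(axis=0)

        # Gradient descent: step each parameter against its gradient.
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return (W1, b1, W2, b2), losses

params, losses = train_xor()
_, probs = forward(params, X_XOR)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print(np.round(probs.ravel(), 2))
```

With these settings the loss should fall well below its starting value, and the rounded outputs usually approach 0, 1, 1, 0. The exact numbers depend on the random initialization.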

Machine Learning: The Goal

Machine learning aims to create models that can generalize from examples to make predictions or decisions. Here’s what it attempts to do:

- Pattern Recognition: Identify patterns and relationships within data that are not explicitly programmed.

- Generalization: Apply the learned patterns to new, unseen data to make accurate predictions.

- Automation: Automate decision-making processes by learning from data and improving over time.
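Generalization is usually checked by holding out data that the model never sees during training. The following is a minimal sketch that assumes synthetic data drawn from a known line, y = 3x + 2, plus noise (purely hypothetical numbers). It fits a straight line to 80% of the points with NumPy's `polyfit`, then compares the error on the training set with the error on the held-out set.

```python
import numpy as np

rng = np.random.default_rng(42)
# Hypothetical data: an underlying pattern y = 3x + 2 plus random noise.
x = rng.uniform(0, 10, size=100)
y = 3 * x + 2 + rng.normal(0, 1.0, size=100)

# Hold out 20% of the examples; the model never sees them while fitting.
x_train, y_train = x[:80], y[:80]
x_test, y_test = x[80:], y[80:]

# "Learn" the pattern: least-squares fit of a straight line.
slope, intercept = np.polyfit(x_train, y_train, deg=1)

def mse(xs, ys):
    preds = slope * xs + intercept
    return float(np.mean((preds - ys) ** 2))

print(f"learned: y = {slope:.2f}x + {intercept:.2f}")
print(f"train MSE: {mse(x_train, y_train):.3f}, test MSE: {mse(x_test, y_test):.3f}")
```

When the held-out error is close to the training error, the model has captured the underlying pattern rather than memorizing individual examples.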

Conclusion

By drawing parallels between biological neurons and artificial perceptrons, we can better understand how neural networks function. These networks are at the core of machine learning, enabling machines to learn from data, recognize patterns, and make intelligent decisions, mimicking the brain’s ability to process complex information.

The Crucial Role of Real Data in AI: Its Impact on Model Performance and on Our Fears

In the realm of artificial intelligence (AI) and machine learning, the quality and authenticity of data used for training models are paramount. Using real, high-quality data is not just beneficial but essential for developing reliable and effective AI systems. Here’s why it matters and the potential risks associated with poor-quality data.

Why Real Data is Essential

Accurate Representation of the Real World:

- Real data captures the complexity and variability of real-world scenarios. It includes the nuances, patterns, and anomalies that artificial data might miss.

- Training models on real data helps ensure that AI systems can handle diverse situations and make accurate predictions when deployed in real-life applications.

Improved Generalization:

- Models trained on real data learn to generalize better to new, unseen data. This means they can apply learned patterns to different contexts, improving their robustness and adaptability.

- Real data provides a variety of examples, helping models distinguish between significant features and noise.

Higher Reliability and Validity:

- Using real data increases the reliability and validity of AI models. Models trained on artificial or biased data may perform well in controlled environments but fail in real-world conditions.

- Authentic data helps in creating models that truly reflect the dynamics of the problem domain, leading to more trustworthy outputs.

Consequences of Poor-Quality Data

Model Bias and Fairness Issues:

- Poor-quality data can introduce biases into AI models. If the data is not representative of all segments of the population or contains inherent biases, the model will learn and perpetuate these biases.

- This can lead to unfair treatment of certain groups, reinforcing existing inequalities and potentially causing harm.
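A deliberately tiny toy example can show how this bias arises. The sketch assumes two hypothetical groups, A and B, whose true decision boundaries differ, with group B under-represented 19 to 1. A one-threshold "model" fit to the pooled data ends up serving the majority group and makes more mistakes on the minority group.

```python
import numpy as np

# Hypothetical, deliberately unrepresentative training set:
# group A has 950 examples, group B only 50, and the true decision
# boundary differs between them (x > 0 for A, x > 1 for B).
x_a = np.linspace(-3, 3, 950)
x_b = np.linspace(-3, 3, 50)
y_a = x_a > 0
y_b = x_b > 1

x_all = np.concatenate([x_a, x_b])
y_all = np.concatenate([y_a, y_b])

# "Train" the simplest possible model: one threshold that minimizes
# the total number of mistakes across the pooled data.
candidates = np.arange(-3, 3, 0.01)
errors = [np.sum((x_all > t) != y_all) for t in candidates]
threshold = candidates[int(np.argmin(errors))]

def accuracy(x, y):
    return float(np.mean((x > threshold) == y))

print(f"learned threshold: {threshold:.2f}")
print(f"accuracy on group A: {accuracy(x_a, y_a):.2f}")
print(f"accuracy on group B: {accuracy(x_b, y_b):.2f}")
```

Nothing in the fitting step is malicious. The skew comes entirely from who is and is not represented in the data, which is why auditing accuracy separately for each group matters.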

Inaccurate Predictions:

- Models trained on incomplete, noisy, or inaccurate data are likely to make erroneous predictions. This can be particularly dangerous in critical applications like healthcare, finance, and autonomous driving.

- Inaccurate predictions undermine the credibility of AI systems and can have severe consequences, including financial losses, safety risks, and ethical violations.

Realizing Our Greatest Fears:

- One of the greatest fears in AI is the development of systems that behave unpredictably or cause unintended harm. Poor-quality data can exacerbate this fear by leading to models that make unreliable or harmful decisions.

- For example, in healthcare, a model trained on biased or inaccurate data could misdiagnose patients or recommend inappropriate treatments, endangering lives.

Reduced Model Performance:

- The performance of AI models is directly tied to the quality of the training data. Poor-quality data can lead to models that are overfitted, underfitted, or simply ineffective.

- This results in wasted resources, as the models fail to deliver the expected outcomes and require retraining with better data.
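Overfitting and underfitting are easiest to see when models of different complexity are fit to the same small dataset. This sketch assumes hypothetical noisy samples of a sine curve and uses NumPy's `Polynomial.fit` with degrees 1, 3, and 12. The degrees, noise level, and sample sizes are illustrative choices.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def true_fn(x):
    return np.sin(2 * np.pi * x)

# Hypothetical small, noisy training set and a larger held-out test set.
x_train = np.sort(rng.uniform(0, 1, 15))
y_train = true_fn(x_train) + rng.normal(0, 0.2, x_train.size)
x_test = np.linspace(0, 1, 200)
y_test = true_fn(x_test) + rng.normal(0, 0.2, x_test.size)

def mse(model, x, y):
    return float(np.mean((model(x) - y) ** 2))

results = {}
for degree in (1, 3, 12):
    model = Polynomial.fit(x_train, y_train, degree)
    results[degree] = (mse(model, x_train, y_train), mse(model, x_test, y_test))
    print(f"degree {degree:2d}: train MSE {results[degree][0]:.3f}, "
          f"test MSE {results[degree][1]:.3f}")
```

Training error can only fall as the degree rises. The degree-1 line underfits, with high error everywhere. The degree-12 curve typically chases the noise and does worse on unseen points than the degree-3 fit.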

Ensuring Data Quality

Data Collection:

- Collect diverse and representative data that accurately reflects the real-world conditions in which the AI system will operate.

- Ensure that the data is comprehensive, covering all relevant aspects and scenarios.

Data Cleaning:

- Process and clean the data to remove noise, inconsistencies, and errors. This step is crucial for maintaining the integrity of the dataset.

- Address missing values, outliers, and any other issues that could compromise the quality of the data.
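Python's standard library is enough to sketch these two cleaning steps. This minimal illustration assumes a hypothetical list of sensor readings in which `None` marks a missing value. It uses median imputation and the common 1.5 × IQR rule for outliers, which are two of many possible choices rather than a prescribed method.

```python
import statistics

# Hypothetical raw sensor readings: None marks a missing value,
# and 250.0 is an implausible outlier (e.g., a logging glitch).
raw = [21.5, 22.0, None, 21.8, 250.0, 22.3, None, 21.9, 22.1, 21.7]

# 1. Missing values: impute with the median of the observed values
#    (the median is robust to the outlier, unlike the mean).
observed = [v for v in raw if v is not None]
median = statistics.median(observed)
imputed = [median if v is None else v for v in raw]

# 2. Outliers: drop points outside 1.5 * IQR beyond the quartiles.
q1, _, q3 = statistics.quantiles(observed, n=4)
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
cleaned = [v for v in imputed if low <= v <= high]

print(f"median used for imputation: {median}")
print(f"kept range: [{low:.2f}, {high:.2f}]")
print(cleaned)
```

Whether to drop, cap, or investigate an outlier depends on the domain. A reading that looks implausible may sometimes be the most important signal in the data.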

Bias Mitigation:

- Identify and mitigate biases in the data. This involves analyzing the dataset for any disproportionate representations and taking corrective actions.

- Use techniques such as resampling, reweighting, and fairness constraints to reduce the influence of those biases on what the model learns.
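Reweighting is the simplest of these techniques to illustrate. The sketch below assumes a hypothetical attribute in which group "B" makes up only 10% of the examples. It gives each example a weight inversely proportional to its group's frequency, using the same n_samples / (n_groups × count) heuristic that scikit-learn applies for `class_weight="balanced"`, so both groups carry equal total weight in the training loss.

```python
from collections import Counter

# Hypothetical training data for a sensitive attribute: group "B" is
# heavily under-represented relative to group "A".
groups = ["A"] * 90 + ["B"] * 10

counts = Counter(groups)
n_samples, n_groups = len(groups), len(counts)

# Inverse-frequency reweighting: weight = n_samples / (n_groups * count).
# Each group then contributes the same total weight to the training loss.
weights = {g: n_samples / (n_groups * c) for g, c in counts.items()}
sample_weights = [weights[g] for g in groups]

for g in sorted(counts):
    total = sum(w for w, gg in zip(sample_weights, groups) if gg == g)
    print(f"group {g}: weight per sample {weights[g]:.3f}, total weight {total:.1f}")
```

These weights would then be passed to a training routine that accepts per-sample weights. Reweighting rebalances influence, but it cannot add information that the under-represented group's data never contained.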

Continuous Monitoring and Updating:

- Continuously monitor the performance of the AI system and update the data as needed. Real-world conditions change, and so should the data feeding into the model.

- Regularly validate the model’s predictions against real-world outcomes to ensure ongoing reliability and accuracy.
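One common way to notice that real-world conditions have changed is to compare the distribution of incoming data with the training data. This sketch computes the Population Stability Index (PSI) for a single numeric feature in plain Python. The simulated data, the 10 equal-width bins, and the often-quoted rule of thumb (below about 0.1 is stable, above about 0.25 signals a significant shift) are assumptions to adapt, not fixed standards.

```python
import math
import random

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index of `actual` relative to `expected`.

    Bin edges are equal-width over the range of the reference data;
    values outside that range are clamped into the edge bins, and `eps`
    avoids log(0) when a bin is empty.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

rng = random.Random(0)
training = [rng.gauss(0.0, 1.0) for _ in range(5000)]
recent_stable = [rng.gauss(0.0, 1.0) for _ in range(5000)]
recent_shifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]  # the mean has drifted

print(f"PSI, same distribution: {psi(training, recent_stable):.3f}")
print(f"PSI, shifted mean:      {psi(training, recent_shifted):.3f}")
```

A PSI alert signals that the inputs have moved. It does not by itself show that predictions have degraded, so it complements, rather than replaces, validating predictions against real-world outcomes.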

Conclusion

Using real, high-quality data is fundamental to the success of AI systems. It ensures accurate representation, improved generalization, and higher reliability. Conversely, poor-quality data can lead to biases, inaccurate predictions, and the realization of our greatest fears about AI’s potential to cause unintended harm. By prioritizing data quality, we can build AI systems that are trustworthy, effective, and aligned with ethical standards, thereby harnessing the true potential of AI for positive impact.

🌟 Don’t miss this enlightening episode! Click the link to listen and join the conversation on the future of AI.

Dr. Bill 360 Episode 5: Understanding AI: https://youtu.be/n19F91KdZu4

The parallels between biological neurons and artificial perceptrons are fascinating! It’s incredible how AI mimics the human brain’s complexity. I’m curious, though—how do you think this technology will evolve in the next decade? Will it ever fully replicate human intuition? Also, the emphasis on high-quality data is crucial, but how do we ensure data authenticity in an era of misinformation? I’d love to hear your thoughts on the ethical implications of AI learning from potentially biased datasets. Do you think AI could ever surpass human decision-making, or will it always be a tool to enhance our capabilities? What’s your take on the balance between innovation and responsibility in AI development?

This episode really sheds light on the fascinating parallels between biological neurons and artificial perceptrons. It’s amazing how AI mimics the human brain’s functionality! I’ve always wondered how neural networks “learn” from data, and the explanation about backpropagation makes it clearer. The emphasis on high-quality data is crucial—garbage in, garbage out, right? But I’m curious, how do we ensure data authenticity in the real world where biases can creep in? Also, do you think AI will ever fully replicate human intuition, or will it always lack that “gut feeling”? What’s your take on the future of AI in decision-making? Let’s discuss! 🤔

This episode really sheds light on the fascinating parallels between biological neurons and artificial perceptrons. It’s incredible how AI mimics the human brain’s complexity to process information and make decisions. The explanation of backpropagation and how neural networks learn from data is both clear and thought-provoking. I’m curious, though, how do we ensure the ethical use of AI, especially when it comes to data authenticity and privacy? The potential of AI is immense, but it’s crucial to address these concerns to avoid misuse. What are your thoughts on balancing innovation with ethical considerations in AI development? Could you elaborate on how we can better regulate AI to ensure it benefits society as a whole?

The parallels between biological neurons and artificial perceptrons are fascinating! It’s incredible how AI mimics the brain’s natural processes to learn and make decisions. I’m curious, though—how do you think the limitations of biological neurons (like fatigue or errors) translate to artificial systems? Do artificial neurons face similar challenges, or are they more resilient? Also, the emphasis on high-quality data is crucial, but how do we ensure data authenticity in an era of misinformation? I wonder if there’s a risk of AI models inheriting biases from flawed datasets. What’s your take on balancing innovation with ethical considerations in AI development? Would love to hear your thoughts!


AI’s ability to mimic biological neurons is fascinating, but how much can it truly replicate the complexity of the human brain? The parallels between neurons and perceptrons are intriguing, but I wonder if AI will ever reach a point where it can “think” like humans. The emphasis on high-quality data is crucial, but how do we ensure fairness and accuracy in the datasets we use? The potential of AI is immense, but are we fully considering the ethical implications? I’d love to hear more about how AI could evolve in the future—what’s the next big leap? Do you think AI will ever surpass human intelligence, or will it always remain a tool?

This episode really sheds light on the fascinating parallels between biological neurons and artificial perceptrons. It’s incredible how AI mimics the human brain’s complexity to process information and make decisions. The explanation of backpropagation and how neural networks learn from data is both clear and thought-provoking. I’m curious, though, how do we ensure the ethical use of AI, especially when it comes to data authenticity and privacy? The potential of AI is immense, but it’s crucial to address these concerns to avoid misuse. What are your thoughts on balancing innovation with ethical considerations in AI development? Would love to hear your perspective on this!

Thanks for the comments. When I hear the discussion about misuse, I think about guns. Guns don’t kill people, people do!

The parallels between biological neurons and artificial perceptrons are fascinating! It’s incredible how AI mimics the human brain’s complexity. I’m curious, though—how do you think this technology will evolve in the next decade? Will it ever fully replicate human intuition? Also, the emphasis on high-quality data is crucial, but how do we ensure data authenticity in an era of misinformation? I’d love to hear your thoughts on the ethical implications of AI learning from potentially biased datasets. Do you think AI could ever surpass human decision-making in critical fields like healthcare or justice? What’s your take on the balance between innovation and responsibility in AI development?

I see comparisons to humans, evolution, replication, authenticity, misinformation, implications, bias, surpassing humans, and much more. I responded to another such comment, and my point was that this amounts to putting the cart before the horse. The horse in this case is a coherent vision, goal, and plan for humanity. Many prestigious universities have done 100-year studies of what the world will look like and need in several sectors relevant to humanity. Similarly, WWF has done the same for the planet. This and more is more than enough to formulate that vision, the goal, and the plans. Only then can AI models have a chance at ‘unbiased’ and ethical decision-making. At this point, I would hope that AI does not mimic the human brain, as we’ve not done so well, have we? Waiting…

This episode really sheds light on the fascinating parallels between biological neurons and artificial perceptrons. It’s incredible how AI mimics the human brain’s complexity to process information. The explanation of backpropagation and how neural networks learn from data is both clear and thought-provoking. I’m curious, though, how do we ensure the ethical use of AI, especially when it comes to data authenticity? The potential of AI is immense, but it’s crucial to address the challenges of bias and data quality. What steps can we take to make AI more transparent and accountable? How do you see AI evolving in the next decade, and what role will ethical considerations play in its development?

Thanks for the question. Everyone has history, context, and thus bias. That’s why in certain manuscripts, e.g., U.S. dissertations, it’s a requirement to state your worldview or philosophy. I’d call that transparency; that way, when people read your dissertation, they also have context. I say this because many people are working on AI, and without consensus on a vision, goals, and objectives for the global community of citizens and the way forward, it’s nearly impossible to know, understand, and apply ethics to a vision or goal that does not exist. By that I mean a vision that is written down, with detailed descriptions, agreed by consensus, of what the terms mean. Then, one formulates a strategy born of a real-world process and ready for assessment, evaluation, feedback, etc. It’s not the AI per se, but a lack of vision by consensus. As a retired military officer, I learned early on that when chaos exists (no plan, confusion, anxiety, etc.), it is the duty and obligation of leadership to ‘pull it straight’ (pull the crooked noodle of chaos straight). It doesn’t mean one just does something; rather, leaders take inputs and make it happen. So, long story short, this is a leadership problem and not an AI problem, in my opinion.